Traditional machine vision relies on preset, user-configured inspection rules and static program code to ensure product quality. These rules and code are derived from descriptions and samples of the product's attributes and defects. Let's classify traditional machine vision as static algorithms that contain user-configurable rules and tools. The tools, which include edge detection, blob analysis, histogram analysis, and character recognition, simplify the creation of machine vision routines.

Each inspection outcome is classified as acceptable or unacceptable based on the criteria being inspected. These algorithms are relatively fast to develop and require little computing power, so classic machine vision is preferred wherever possible. But what happens when the defining characteristics of a product defect are broad, random, and highly variable? A static machine vision algorithm may no longer be the appropriate tool, because it cannot dynamically adapt to, or account for, all of that variation.
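To make the idea of a static rule concrete, here is one user-configured blob-analysis rule written in plain Python. It is a minimal sketch that assumes the image has already been thresholded into a binary grid (1 = dark pixel) and uses a hypothetical `MAX_DEFECT_AREA` limit; neither comes from any specific machine vision product. The rule is fast and easy to validate, but it only catches defects that fit its fixed definition.

```python
from collections import deque

# Hypothetical user-configured rule: reject if any dark blob exceeds this area (pixels).
MAX_DEFECT_AREA = 3


def blob_areas(binary_image):
    """Return the pixel area of each 4-connected blob of 1s in a binary image."""
    rows, cols = len(binary_image), len(binary_image[0])
    seen = [[False] * cols for _ in range(rows)]
    areas = []
    for r in range(rows):
        for c in range(cols):
            if binary_image[r][c] == 1 and not seen[r][c]:
                # Breadth-first flood fill measures one connected blob.
                queue = deque([(r, c)])
                seen[r][c] = True
                area = 0
                while queue:
                    y, x = queue.popleft()
                    area += 1
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and binary_image[ny][nx] == 1 and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                areas.append(area)
    return areas


def inspect(binary_image, max_area=MAX_DEFECT_AREA):
    """Static rule: 'acceptable' only if every blob is at or below max_area."""
    if all(a <= max_area for a in blob_areas(binary_image)):
        return "acceptable"
    return "unacceptable"


good = [[0, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 0, 0]]  # two isolated 1-pixel specks
bad = [[1, 1, 0],
       [1, 1, 0],
       [0, 0, 0]]      # one 4-pixel blob
result_good = inspect(good)  # "acceptable"
result_bad = inspect(bad)    # "unacceptable"
```

A defect that varies in shape or contrast, such as a faint smear that breaks into many small blobs, can slip past a fixed area limit like this one even when an experienced inspector would reject the part.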

In these situations, the final decision on product compliance requires intervention from human operators. Unfortunately, relying on human inspection becomes problematic over time, especially for the critical-to-quality attributes used to validate manufacturing process control (as in the case of medical device assembly). Fully manual inspection raises several issues, including operator training, staff turnover, and human subjectivity and error.

Fortunately, there is a machine vision solution that addresses the problems arising from inadequately defined, poorly understood, or complex product defects. An AI-based approach, using machine learning techniques, can accurately replicate the human assessment and decision process when distinguishing between acceptable and unacceptable product.

Ways to Automate Visual Inspection

Manual and automated manufacturing can’t be successful without a robust inspection and verification methodology. The reasons for implementing an inspection and verification regimen can include:

- Product quality assessment of in-process and/or finished product

- Diagnostics that collect information to monitor machines and/or product

- Continuous improvement

- Serialization

- Counting, verification, and reconciliation

- CGMP and regulatory compliance

- Measurement and metrology

- Stakeholder and procedural requirements

- Safety

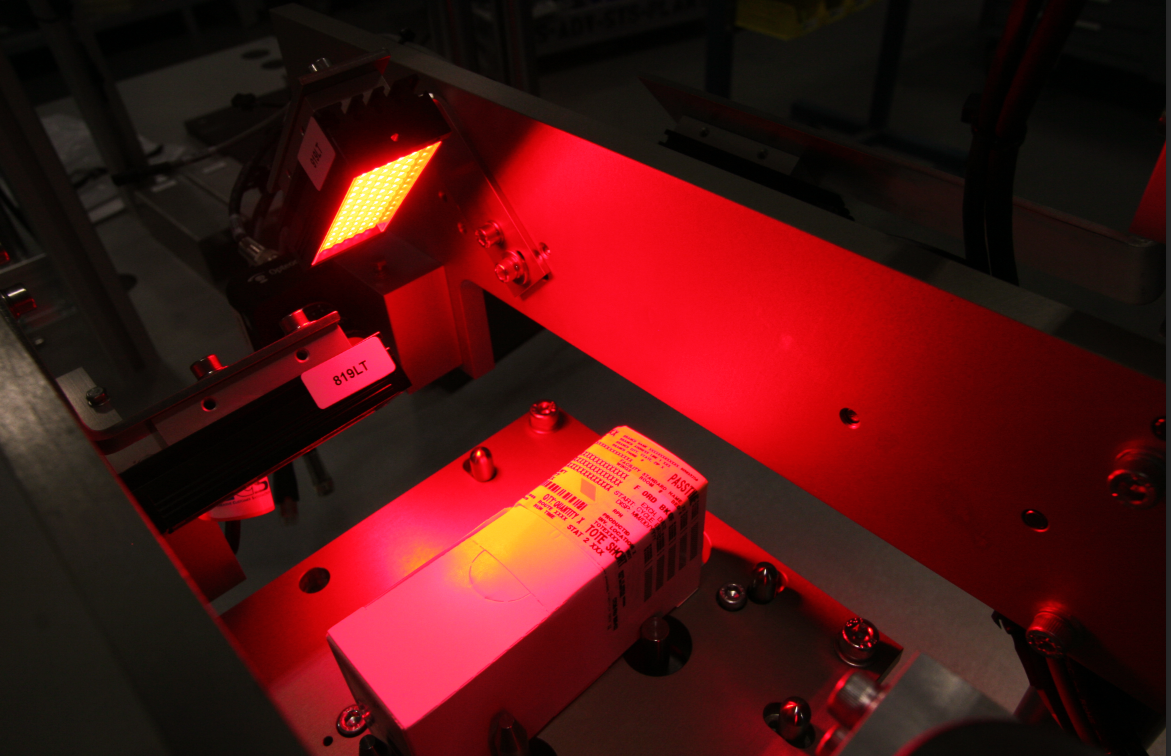

Vision system performing an in-process label inspection

Automated visual inspection (AVI) systems help ensure that results are consistent, free of subjectivity and bias, properly logged, and traceable. This data, along with documented due diligence, is critical for demonstrating quality control and assurance, and it provides evidence during internal, external, and regulatory audits.

Fully automatic inspections have the advantage in consistency and human resources; however, they can be expensive from an upfront capital, implementation, and validation perspective. For instance, they might require:

- Costly upfront hardware and software development investments

- Shutdowns during installation, training, and maintenance

- Complex logic systems that must be validated at every development stage

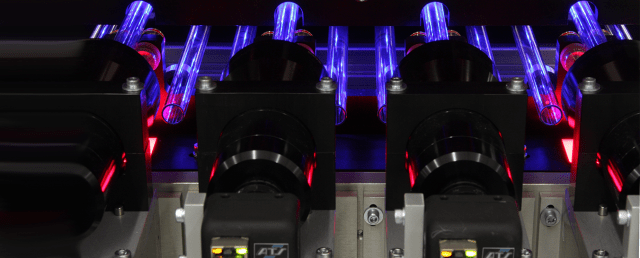

Series of telecentric lenses performing a high-accuracy inspection

Thus, let's focus on a hybrid model that shifts from inspecting parts directly to manually inspecting images of the parts. A hybrid inspection model combines automated processes with manual inspection decision-making. As such, it offers many of the benefits of automated processes without the drawbacks of implementing a fully automated system. Additionally, hybrid inspection models are low-risk, transitional solutions for organizations looking to move gradually from manual processes to fully automated ones.

The Transition from Manual to Automated Visual Inspections

A hybrid model helps meet inspection needs that neither manual operators nor traditional machine vision tools can meet on their own. The hybrid model is rolled out in phases, the first of which runs in parallel with existing operator inspections. This phase works as follows:

- Install cameras that capture images of the product from the same viewpoint as the inspecting operator

- Correlate the decisions made by the operators with the corresponding images taken by the cameras

- Remotely review the stored images and further classify them into additional defect categories

Alternatively, this process can occur in real time at the rate of production, much as airport luggage inspections are performed during ongoing security checks. X-ray images of each bag are captured as it moves along a conveyor; the operator views the image and accepts or rejects the bag based on known criteria. The advantage of the initial phase of a hybrid solution is that it combines innate human decision-making with camera-based image capture. As a result, you can:

- Trace the quality ruling of every image presented to an operator

- Verify machine inspection and machine learning tools simultaneously

- Archive images of passed/failed parts

- Train employees and AI systems using the stored images

- Generate trend data sets for future analytics

This setup also allows operators to be relocated outside the cleanroom or manufacturing area (as is the case for medical device inspection).
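The first-phase step of correlating operator decisions with camera images, which also underpins tracing every quality ruling and archiving passed/failed images, can be sketched as a simple join. This minimal sketch assumes that images and operator decisions are both keyed by part serial number; the `InspectionRecord` schema and the function names are hypothetical and do not describe the Illuminate or SmartVision data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InspectionRecord:
    """One camera image linked to the operator's ruling (hypothetical schema)."""
    image_id: str
    part_serial: str
    operator_decision: str                 # "accept" or "reject"
    defect_category: Optional[str] = None  # filled in later during remote review


def correlate(images, decisions):
    """Pair each camera image with the operator decision for the same part.

    images:    dict mapping part serial number -> image ID
    decisions: dict mapping part serial number -> "accept" or "reject"
    Parts present on only one side are skipped instead of guessed.
    """
    return [
        InspectionRecord(image_id=images[serial], part_serial=serial,
                         operator_decision=decisions[serial])
        for serial in sorted(images.keys() & decisions.keys())
    ]


def assign_defect_category(record, category):
    """Remote reviewer refines a rejected image into a specific defect category."""
    if record.operator_decision != "reject":
        raise ValueError("only rejected images receive a defect category")
    record.defect_category = category
    return record


# Usage with made-up serial numbers and image IDs.
images = {"SN001": "img_0001.png", "SN002": "img_0002.png", "SN003": "img_0003.png"}
decisions = {"SN001": "accept", "SN002": "reject"}  # SN003 not yet ruled on
records = correlate(images, decisions)
assign_defect_category(records[1], "label_misaligned")
```

Because each record keeps the image ID next to the human ruling, the same archive can later serve as labeled training data.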

Video demonstrating the part handling point of view

Moving from Hybrid to Fully AI-Based Inspection

In the second phase of the hybrid model, attention turns to developing an AI-based vision algorithm. This requires a well-curated set of collected images that have been manually classified as acceptable or unacceptable. The unacceptable images are further sorted into categories that explain why they are unacceptable. Each inspection can then report both a classification and a confidence level. These confidence levels make it possible to route each part through a manual, hybrid, or fully automated workflow.
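A minimal sketch of confidence-based routing follows, assuming the model returns a label plus a confidence between 0 and 1. The threshold values and the `route` function are hypothetical placeholders; in a regulated setting they would be set and justified during validation.

```python
# Hypothetical thresholds; real values would be justified with validation data.
AUTO_ACCEPT_MIN = 0.98
AUTO_REJECT_MIN = 0.95


def route(label, confidence, auto_accept_min=AUTO_ACCEPT_MIN,
          auto_reject_min=AUTO_REJECT_MIN):
    """Route one inspection result using the model's label and confidence.

    label:      "acceptable" or the name of a defect category
    confidence: model confidence in [0, 1] for that label
    Returns "auto_accept", "auto_reject", or "manual_review".
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if label == "acceptable":
        return "auto_accept" if confidence >= auto_accept_min else "manual_review"
    return "auto_reject" if confidence >= auto_reject_min else "manual_review"


confident_pass = route("acceptable", 0.99)  # "auto_accept"
unsure_defect = route("scratch", 0.90)      # "manual_review"
```

Setting both thresholds above 1.0 sends every part to an operator (a fully manual workflow), while lowering them lets more parts be dispositioned automatically, so one routing rule can cover the manual, hybrid, and automated cases.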

These classified images can then be analyzed by AI — specifically machine learning techniques — to determine underlying patterns and features specific to each defect category. The result is an algorithm developed from empirical, human-evaluated data.
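The article does not specify which machine learning technique is used. As a deliberately simple stand-in, this sketch fits a nearest-centroid classifier to feature vectors labeled by human inspectors and returns a label with a confidence score. The two-element feature vectors, category names, and softmax-style confidence are illustrative assumptions; a production system would more likely learn features directly from the images.

```python
import math
from collections import defaultdict


def fit_centroids(features, labels):
    """Average the feature vectors that humans assigned to each category."""
    sums = {}
    counts = defaultdict(int)
    for vec, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vec)
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids, vec):
    """Return (label, confidence) for the centroid nearest to vec.

    Confidence is a softmax over negative Euclidean distances: an illustrative
    choice, not a calibrated probability.
    """
    dists = {label: math.dist(vec, c) for label, c in centroids.items()}
    weights = {label: math.exp(-d) for label, d in dists.items()}
    best = min(dists, key=dists.get)
    return best, weights[best] / sum(weights.values())


# Hypothetical features, e.g. [edge density, dark-pixel ratio], labeled by inspectors.
features = [[0.1, 0.2], [0.2, 0.1], [0.9, 0.8], [0.8, 0.9]]
labels = ["acceptable", "acceptable", "scratch", "scratch"]
centroids = fit_centroids(features, labels)
label, confidence = predict(centroids, [0.85, 0.85])  # label == "scratch"
```

The point is only that the decision boundary comes from human-labeled examples rather than from a hand-written rule.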

An advantage of AI-based algorithms is their ability to continually learn and refine their logic; however, a model that keeps changing in production is incompatible with the validation demands of the regulated life sciences industry. Thus, an additional step is needed to create static, validated releases of the AI algorithm/model.
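One way to keep a release static is to fingerprint the validated model artifact and refuse to run anything else. This sketch uses Python's standard `hashlib` module and assumes the trained model is available as raw bytes; the manifest format and function names are hypothetical.

```python
import hashlib


def release_manifest(model_bytes, version):
    """Record the version and SHA-256 digest of a validated model (hypothetical format)."""
    return {"version": version, "sha256": hashlib.sha256(model_bytes).hexdigest()}


def load_validated_model(model_bytes, manifest):
    """Refuse to use model bytes that differ from the validated release."""
    if hashlib.sha256(model_bytes).hexdigest() != manifest["sha256"]:
        raise RuntimeError(f"model does not match validated release {manifest['version']}")
    return model_bytes  # a real system would deserialize the model here


validated_model = b"placeholder weights for release 1.0.0"
manifest = release_manifest(validated_model, "1.0.0")
loaded = load_validated_model(validated_model, manifest)  # passes the integrity check
```

Any retraining produces a different digest and therefore a new release, which must be validated before it can replace the old one.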

Initially, it's best to keep the operator as the inspection arbiter until confidence is established in the AI's automated judgments. This step lets the operator's knowledge converge with static, traditional machine vision solutions. Without it, machine vision solutions can't adapt to the variability of the defect conditions; with the validated algorithm, an automated vision system can make a determination about each reject.
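Deciding when the operator can step back as arbiter requires evidence that the AI agrees with the operator. A minimal sketch, assuming paired accept/reject decisions on the same parts and treating the operator's ruling as the reference, computes an agreement rate and counts escapes (parts the operator rejected but the AI would have passed). The limits in the hypothetical `ready_to_automate` function are placeholders; real acceptance criteria would be defined in the validation plan.

```python
from collections import Counter


def agreement_report(operator_decisions, ai_decisions):
    """Compare paired operator and AI decisions ("accept"/"reject") on the same parts."""
    if len(operator_decisions) != len(ai_decisions):
        raise ValueError("decision lists must be paired one-to-one")
    if not operator_decisions:
        raise ValueError("no decisions to compare")
    pairs = Counter(zip(operator_decisions, ai_decisions))
    agree = pairs[("accept", "accept")] + pairs[("reject", "reject")]
    return {
        "agreement_rate": agree / len(operator_decisions),
        # Escapes: operator rejected, AI would have accepted (the costly error).
        "ai_escapes": pairs[("reject", "accept")],
        # False rejects: operator accepted, AI would have rejected.
        "ai_false_rejects": pairs[("accept", "reject")],
    }


def ready_to_automate(report, min_agreement=0.995, max_escapes=0):
    """Hypothetical acceptance criterion for retiring the operator as arbiter."""
    return report["agreement_rate"] >= min_agreement and report["ai_escapes"] <= max_escapes


operator = ["accept", "reject", "accept", "accept"]
ai = ["accept", "reject", "reject", "accept"]
report = agreement_report(operator, ai)  # 3 of 4 agree, 1 false reject, 0 escapes
```

Counting escapes separately from false rejects reflects that the two errors carry very different quality risks, even when the overall agreement rate looks high.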

An Available Machine Vision AI Solution

The camera system, the vision data management system, and the AI-based algorithms that detect and classify rejects are components of a complete solution offered by ATS Automation. Illuminate Manufacturing Intelligence™ and ATS SmartVision™ are the software platforms at the heart of this solution. Illuminate is an Industry 4.0 smart manufacturing data collection and analysis system, while ATS SmartVision provides a full machine vision development environment for acquiring and analyzing images. This solution has been deployed on numerous life sciences automated systems. The need for a hybrid machine learning model arose from working with manufacturers that were either concerned about the cost and risk of implementing automated vision systems or reluctant to keep relying solely on human capital for inspections. The deployed ATS AVI solutions are highly configurable to address these concerns.

Please contact an ATS representative to discuss your product needs.
